Agent to Agent Protocol

When AI Agents Finally Start Talking to Each Other

If you’ve been building AI agents recently, you’ve probably hit the “lonely island” problem. You build a fantastic agent in CrewAI, your friend builds one in LangGraph, and I build one using Google’s Agent Development Kit (ADK). They are all brilliant, but they can’t talk to each other. They are siloed intelligent systems.

Google recently released the Agent to Agent (A2A) Protocol, and it might just be the “TCP/IP moment” for Agentic AI. Let’s dive into what this protocol is, how it works, and why it’s different from things like MCP.

What is the Agent to Agent (A2A) Protocol?

In simple terms, A2A is a standard that allows AI agents to communicate, collaborate, and exchange information regardless of how they were built or where they are running.

Think of it as HTTP for AI agents. HTTP gave very different machines one shared way to exchange requests and responses, and A2A wants to do the same for agents. It doesn’t matter if your agent is a “customer support” bot written in Python or an “inventory manager” written in Java; if they speak A2A, they can work together.

The Core Problem It Solves

Right now, if I want my “Travel Planner Agent” to book a flight using your “Airline Booking Agent,” I usually have to write custom integration code. I need to know your API schema, your auth headers, and your specific quirks.

With A2A, my agent can just “discover” your agent, read its Agent Card (more on this later), and start a structured conversation to get the job done.

How Does It Actually Work?

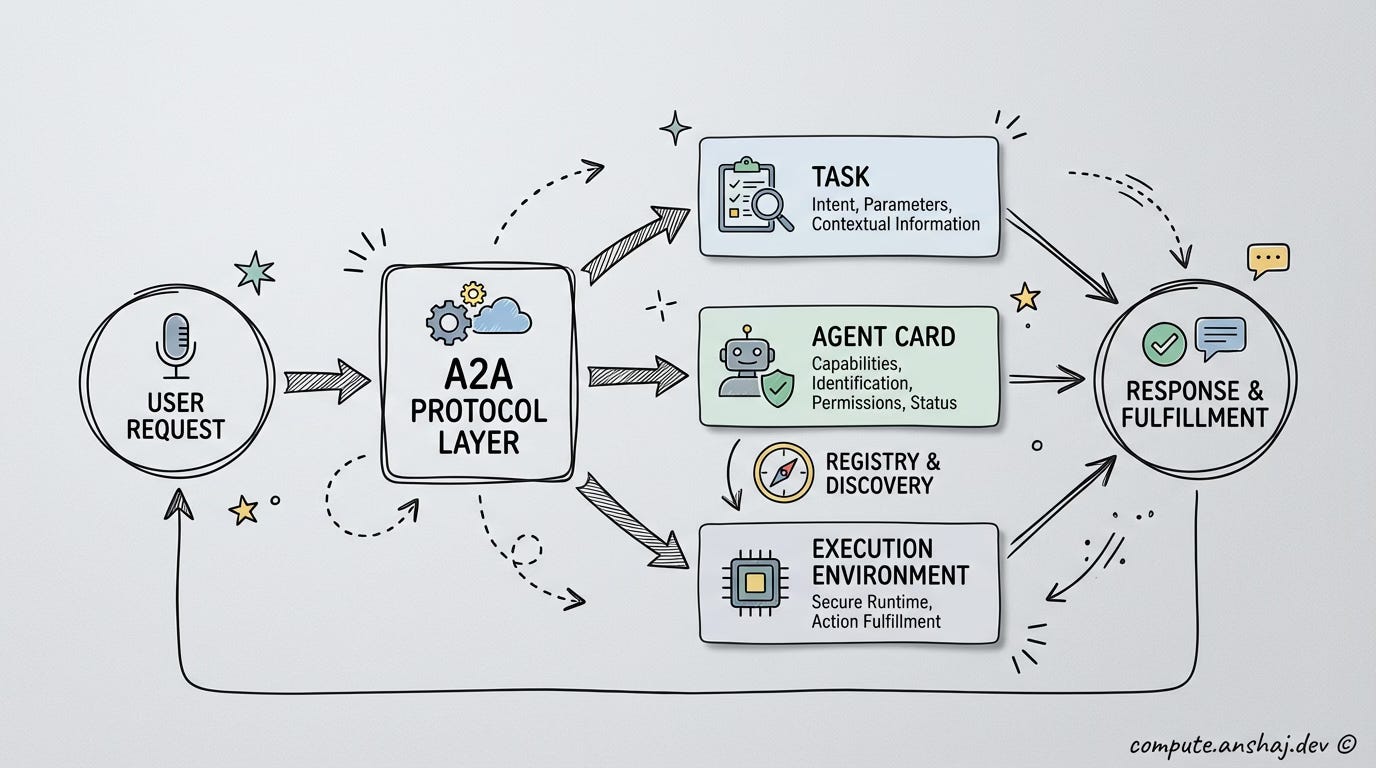

The protocol is built on web standards we already know and love: HTTP, JSON-RPC, and SSE (Server-Sent Events). It’s not some obscure binary format; it’s just web requests.
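To make “it’s just web requests” concrete, here is a minimal sketch of the two wire formats involved: a JSON-RPC 2.0 request body and a Server-Sent Events stream. The method name `tasks/send`, the shape of `params`, and the sample stream are assumptions for illustration, not quoted from the A2A specification, and the SSE parser is deliberately simplified.

```python
import json


def build_jsonrpc_request(method, params, request_id):
    """Wrap a call in a JSON-RPC 2.0 envelope, ready to be POSTed over HTTP."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
    )


def parse_sse(stream_text):
    """Split a text/event-stream payload into a list of decoded JSON events.

    Simplified: only `data:` lines are handled, and a blank line ends an event.
    """
    events, data_lines = [], []
    for line in stream_text.splitlines():
        if line.startswith("data:"):
            data_lines.append(line[len("data:"):].strip())
        elif line == "" and data_lines:
            events.append(json.loads("\n".join(data_lines)))
            data_lines = []
    if data_lines:  # stream ended without a trailing blank line
        events.append(json.loads("\n".join(data_lines)))
    return events


# Hypothetical request: the method name and params are illustrative only.
request_body = build_jsonrpc_request(
    "tasks/send", {"text": "Forecast for Paris?"}, request_id=1
)

# Hypothetical stream of status updates, as a server might push them over SSE.
sample_stream = (
    'data: {"state": "working"}\n'
    "\n"
    'data: {"state": "completed", "text": "Sunny"}\n'
    "\n"
)
events = parse_sse(sample_stream)

print(request_body)
print([event["state"] for event in events])
```

Nothing here needs a special client library: the request is a JSON string you could send with any HTTP client, and the stream is plain text that arrives line by line.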

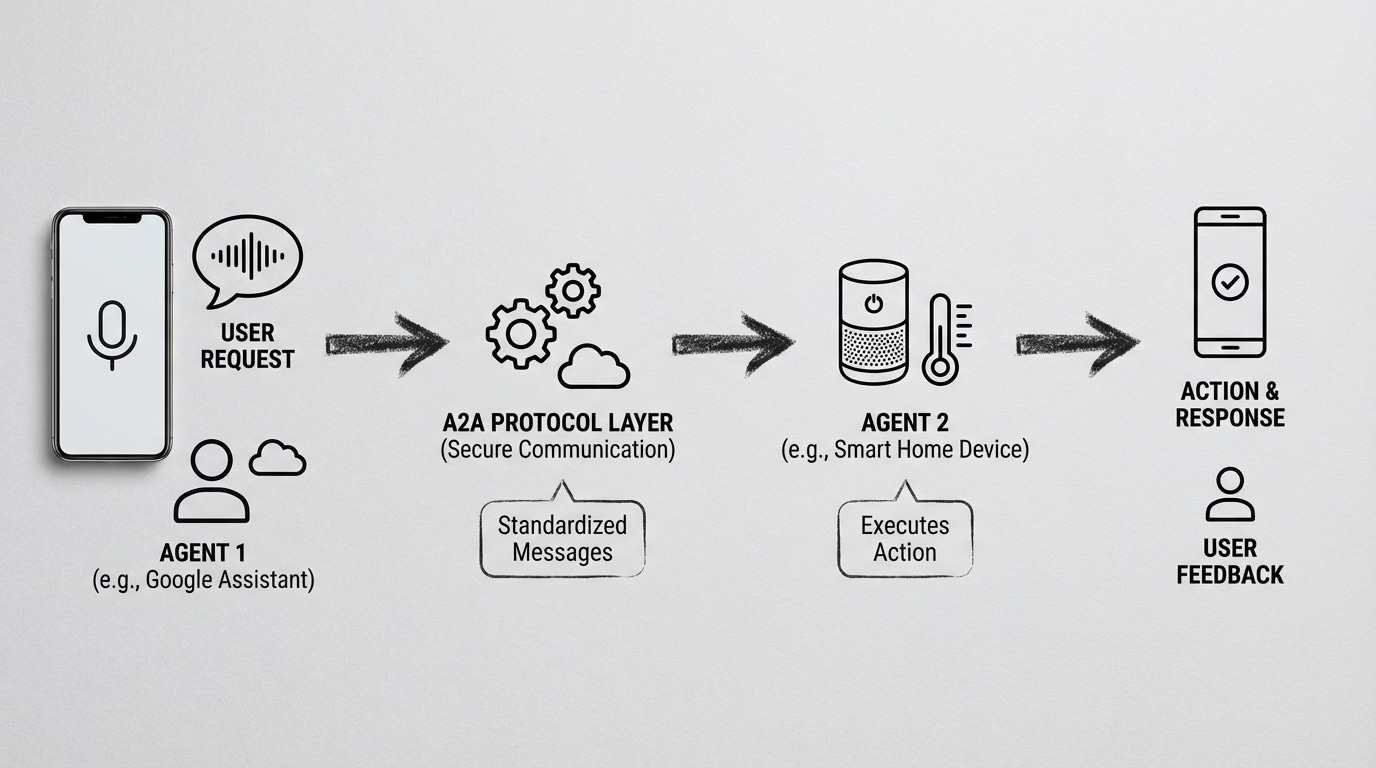

Here is the high-level flow:

Discovery (The Agent Card): Every A2A-compliant agent hosts an agent.json file (the Agent Card) at a well-known URL. This is like a business card. It tells other agents:

“Hi, I’m the Weather Agent.”

“I can give you forecasts and historical data.”

“Here is how you authenticate with me.”

Handshake: My agent reads your card and understands your capabilities.

Task Execution: My agent sends a Task to your agent. This isn’t just a single prompt; it can be a long-running job.

Asynchronous Communication: Your agent might need to ask clarifying questions (“Which city?”). A2A handles this back-and-forth negotiation, including waiting for human input if needed.
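The four steps above can be sketched end to end in memory, with no network involved. Everything in this sketch is hypothetical: the card fields, the `/.well-known/agent.json` path, the skill IDs, the `input-required` and `completed` state names, and the `FakeWeatherAgent` class are stand-ins chosen to mirror the flow described here. Check the A2A specification for the real schema before relying on any of them.

```python
from urllib.parse import urljoin

# Assumed path; the text above only says "a well-known URL".
AGENT_CARD_PATH = "/.well-known/agent.json"

# Hypothetical Agent Card, written as the dict you would get after parsing JSON.
WEATHER_CARD = {
    "name": "Weather Agent",
    "description": "Forecasts and historical weather data.",
    "url": "https://weather.example.com/a2a",
    "authentication": {"schemes": ["bearer"]},
    "skills": [{"id": "forecast"}, {"id": "historical"}],
}


def card_url(base_url):
    """Discovery: where a client would look for an agent's card."""
    return urljoin(base_url, AGENT_CARD_PATH)


def supports(card, skill_id):
    """Handshake: check the card for a capability before sending work."""
    return any(skill["id"] == skill_id for skill in card.get("skills", []))


class FakeWeatherAgent:
    """Stand-in for a remote agent; a real one would sit behind HTTP."""

    def handle(self, task):
        if "city" not in task["input"]:
            # The agent cannot finish yet, so it asks a clarifying question.
            return {"id": task["id"], "state": "input-required",
                    "question": "Which city?"}
        city = task["input"]["city"]
        return {"id": task["id"], "state": "completed",
                "result": f"Forecast for {city}: sunny"}


def run_task(agent, task, answer_question, max_rounds=3):
    """Task execution: resend the task until the agent stops asking questions."""
    reply = agent.handle(task)
    rounds = 0
    while reply["state"] == "input-required" and rounds < max_rounds:
        task["input"].update(answer_question(reply["question"]))
        reply = agent.handle(task)
        rounds += 1
    return reply


task = {"id": "task-1", "input": {"request": "weekend forecast"}}
if supports(WEATHER_CARD, "forecast"):
    final = run_task(FakeWeatherAgent(), task, lambda question: {"city": "Paris"})
    print(card_url("https://weather.example.com"))
    print(final["state"], "-", final["result"])
```

The `answer_question` callback is where a human or another agent would supply the missing detail, which is why the task is modeled as a loop over states rather than a single request and response.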

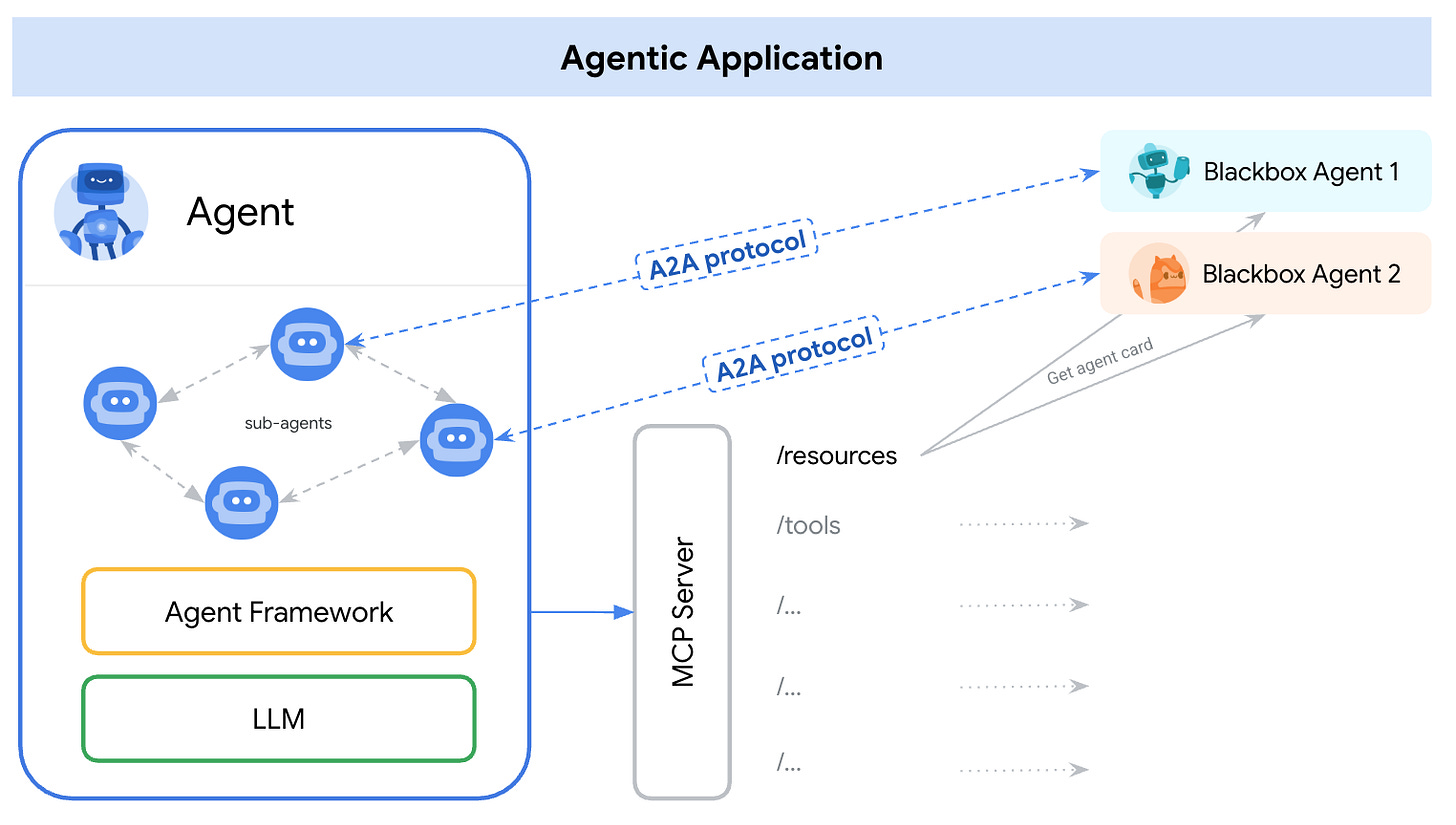

A2A vs. MCP: What’s the Difference?

This is the most common question I see. “We already have Anthropic’s Model Context Protocol (MCP), why do we need this?”

It helps to visualize them as Vertical vs. Horizontal:

MCP is Vertical (Agent ↔ Tool): MCP connects an agent to a database, a file system, or a GitHub repository. It gives the agent “hands” to do things.

A2A is Horizontal (Agent ↔ Agent): A2A connects an agent to another agent. It allows for delegation and teamwork.

In a real-world scenario, you would use both. Your “Project Manager Agent” uses A2A to talk to a “Coder Agent.” That Coder Agent then uses MCP to access the GitHub API and write code.
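Here is a toy sketch of that layering. Plain Python method calls stand in for both protocols, so this shows only the shape of the delegation, not real A2A or MCP traffic. The class names and the `commit` method are hypothetical.

```python
class GitHubTool:
    """Stand-in for a tool reached over MCP (vertical: agent to tool)."""

    def __init__(self):
        self.commits = []

    def commit(self, message):
        self.commits.append(message)
        return len(self.commits)  # pretend commit number


class CoderAgent:
    """Receives tasks from other agents and uses its tool to carry them out."""

    def __init__(self, tool):
        self.tool = tool

    def handle_task(self, description):
        commit_number = self.tool.commit(f"Implement: {description}")
        return {"state": "completed", "summary": f"commit #{commit_number}"}


class ProjectManagerAgent:
    """Delegates work to a peer agent (horizontal: agent to agent)."""

    def __init__(self, coder):
        self.coder = coder

    def delegate(self, description):
        # The manager sees only the task result, never the coder's tools.
        return self.coder.handle_task(description)


tool = GitHubTool()
manager = ProjectManagerAgent(CoderAgent(tool))
outcome = manager.delegate("add login page")
print(outcome["summary"])
print(tool.commits)
```

Note that `ProjectManagerAgent` holds no reference to `GitHubTool`: the tool connection is the coder’s private business, which is the same separation the two protocols draw.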

Why You Should Care

No Vendor Lock-in: You aren’t stuck in the LangChain or Google ecosystem. You can mix and match.

Opaque Collaboration: You can use an external agent (like a payment processor agent) without needing to know its internal prompt engineering or logic. You just care about the result.

Standardization: We stop rewriting the same “how do I send a message” logic for every new agent framework.

Final Thoughts

We are moving away from single, god-mode agents toward multi-agent systems (MAS). The A2A protocol is a massive step toward making that ecosystem interoperable. It’s open source (now hosted by the Linux Foundation), which gives me hope that it will become a genuine standard rather than just a “Google thing.”

If you are building agents today, it is worth looking into making them A2A compliant. It might just be the difference between building a tool and building a teammate. I’ve created a GitHub repository where I’ve been experimenting with the A2A protocol. Feel free to check it out if you like.