Containers in software engineering

Learn the basics of Docker & Kubernetes in under 10 minutes

The why of containers

“It works on my machine, I’m not sure why it’s breaking on yours” is a problem that plagued developers for years. With the rise of agile and the push to quantify developers’ productivity, it became clear that such problems needed solutions. If you cannot be sure that the software that runs on your machine will work on a fellow dev’s machine, you cannot be sure that it’ll work in production either.

This is one of the major problems that containers solved. Let’s understand this more. The problem of "it works on my machine" arises when an application behaves differently in different environments (e.g., development, testing, or production) due to discrepancies in dependencies, configurations, or operating system setups.

Containers address this issue by providing a consistent and isolated runtime environment in the following ways:

Encapsulating Dependencies

Containers package all the dependencies (libraries, frameworks, configuration files, etc.) an application needs to run. This ensures the application behaves the same way regardless of where it's deployed, as the container runs with its bundled dependencies.

Portability Across Platforms

Containers abstract away the underlying infrastructure by providing a consistent runtime. Whether it's running on a developer's laptop, a testing server, or a production environment, the container ensures uniform behavior.

Isolation

Containers run in isolated environments with their own filesystem, process space, and network stack, so they are largely unaffected by the host system's configuration or by other applications running on the same host (they do still share the host's kernel). This eliminates conflicts like mismatched library versions or environment variables.

Reproducibility

Container images are built from Dockerfiles or equivalent configuration files, which describe the environment setup in a version-controlled and reproducible manner. Teams can rebuild an image at any time and, provided base images and dependency versions are pinned, get the same environment everywhere.

Simplified Onboarding

New developers or team members don’t need to replicate complex environment setups. They can simply use the containerized application, ensuring they work with the exact same environment as everyone else.

What are containers

Containers are lightweight, standalone, and executable packages of software that include everything needed to run an application: the code, runtime, libraries, and system tools. They provide a consistent computing environment, making applications portable across different systems.

What Containers do

Encapsulation: Bundle applications and their dependencies together.

Portability: Run consistently across various environments (development, testing, and production).

Isolation: Ensure that one container doesn't affect others running on the same host.

Efficiency: Use system resources effectively without the overhead of full virtualization (e.g., virtual machines).

What is Docker?

Docker is one of many ways to build and run containers. Although it is one of the most popular and widely adopted containerization platforms, there are several alternatives that can build, run, and manage containers, each with its own strengths and use cases. Docker is the first thing people think of when talking about containers because, for a very long time, it was the most popular choice for working with them.

Docker is a great platform and toolset for creating, deploying, and managing containers. It simplifies containerization by providing:

A Dockerfile to define the container’s configuration.

A Docker Engine to build, run, and manage containers.

Docker Hub, a registry for sharing and distributing container images.

Let’s see what a Dockerfile looks like:

    # Use an official Python runtime as a parent image
    FROM python:3.9-slim

    # Set the working directory inside the container
    WORKDIR /app

    # Copy the current directory's contents into the container
    COPY . /app

    # Install the Python dependencies
    RUN pip install --no-cache-dir -r requirements.txt

    # Expose port 5000 for the application
    EXPOSE 5000

    # Command to run the application
    CMD ["python", "app.py"]

Let’s walk through what each instruction does:

FROM: Specifies the base image (e.g., python:3.9-slim) that the container image will build upon.

WORKDIR: Sets the working directory inside the container where subsequent commands will be executed.

COPY: Copies files and directories from the host machine (the build context) into the image.

RUN: Executes a command during the build process, such as installing dependencies.

EXPOSE: Documents the port on which the containerized application will listen. It does not publish the port by itself; that happens with -p at run time.

CMD: Defines the default command to run when the container starts, such as launching the application.

Now that we’ve seen what a Dockerfile looks like, let’s see how to build an image and run it.

Building an image

Building a Docker image is the process of creating a container image by executing the instructions defined in a Dockerfile. The result is a self-contained package that includes the application, its dependencies, and any configurations needed to run it.

For the example Dockerfile above, the following shell commands build the image and run the container:

Build the image

    docker build -t my-python-app .

-t my-python-app: Tags the image with the name my-python-app.

. (the trailing dot): Refers to the build context, here the current directory, which contains the Dockerfile.

Run the container

    docker run -p 5000:5000 my-python-app

-p 5000:5000: Maps port 5000 on the host to port 5000 in the container (the format is host:container).

my-python-app: The name of the image to run.

Key things to remember

A Dockerfile is a script with instructions to build a container image.

Instructions that change the filesystem (such as RUN and COPY) each create a new layer in the image. Unchanged layers are reused from the build cache, which makes rebuilds faster.

It’s designed to be simple yet powerful, enabling consistent and reproducible environments.
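To get a feel for why layer caching matters, here is a toy model in Python. It is a simplified sketch and not Docker's actual algorithm: it assumes each layer's cache key depends on the previous layer's key plus the instruction text, and it fakes a file change with a made-up "checksum" comment on the COPY line. The function names are hypothetical.

```python
import hashlib


def layer_keys(instructions, parent="scratch"):
    """Return one cache key per instruction; each key chains in the previous one."""
    keys = []
    for instruction in instructions:
        parent = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()
        keys.append(parent)
    return keys


def reused_layers(old, new):
    """Count the leading layers whose cache keys are identical in both builds."""
    count = 0
    for old_key, new_key in zip(layer_keys(old), layer_keys(new)):
        if old_key != new_key:
            break  # once one layer differs, every later layer must be rebuilt
        count += 1
    return count


original = [
    "FROM python:3.9-slim",
    "WORKDIR /app",
    "COPY . /app  # checksum: aaa",  # stand-in for the copied files' contents
    "RUN pip install --no-cache-dir -r requirements.txt",
]
edited = list(original)
edited[2] = "COPY . /app  # checksum: bbb"  # a source file changed

print(reused_layers(original, edited))  # 2
```

In this model, changing any source file invalidates the COPY layer and therefore the pip install layer after it. That is why many Dockerfiles copy requirements.txt and install dependencies before copying the rest of the code.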

Kubernetes - Managing a ton of containers

As systems scale, managing and deploying containers manually becomes challenging. You can have hundreds of containers running on hundreds of servers. Several challenges show up at this scale:

Orchestration difficulty: Applications often consist of multiple services (e.g., frontend, backend, database) running in many containers. Orchestrating these containers manually is cumbersome.

Scaling: Handling variable workloads requires scaling containers up or down dynamically, which is difficult to do without automation.

Availability: Ensuring that applications are always running, even if some containers or nodes fail, requires self-healing capabilities.

Load Balancing: Distributing traffic effectively across multiple instances of a service ensures reliability and efficiency.

Deployments: Rolling updates, canary deployments, and rollbacks are difficult to manage manually.

If Docker is the tool used to create and run containers, Kubernetes is the system that orchestrates and manages those containers at scale. Here’s a metaphor and a more technical explanation to clarify their relationship:

Containers vs. Kubernetes

Docker (Containers): Imagine containers as individual delivery trucks. Each truck is self-contained, carrying specific goods (an application and its dependencies). Docker is the tool that builds, loads, and drives these trucks.

Kubernetes (Orchestrator): Now imagine you manage a large logistics company with thousands of delivery trucks. Kubernetes is the system that plans routes, monitors truck conditions, schedules deliveries, ensures trucks aren’t idle, and reroutes trucks when one breaks down.

While Docker focuses on building and running individual containers on a single machine, Kubernetes coordinates how all containers work together across multiple machines.

With Docker alone:

You manually start containers, stop them, and figure out how to connect them.

You’re responsible for scaling and handling failures.

With Kubernetes:

You define the system’s desired state (e.g., "run 5 instances of my app and scale if CPU usage exceeds 70%").

Kubernetes handles starting, scaling, load balancing, and restarting containers automatically.
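The "desired state" idea can be sketched in a few lines of Python. This is only an illustration of the control-loop pattern, not Kubernetes code: the Cluster class, the reconcile function, and the my-app-N names are all made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for a cluster: just a list of running instance names."""
    running: list = field(default_factory=list)
    next_id: int = 0

    def start(self):
        self.running.append(f"my-app-{self.next_id}")
        self.next_id += 1

    def stop(self):
        self.running.pop()


def reconcile(cluster, desired_replicas):
    """One pass of a control loop: move the actual state toward the desired state."""
    while len(cluster.running) < desired_replicas:
        cluster.start()
    while len(cluster.running) > desired_replicas:
        cluster.stop()
    return cluster.running


cluster = Cluster()
reconcile(cluster, 5)                # initial rollout: 5 instances
cluster.running.remove("my-app-2")   # simulate a crashed instance
reconcile(cluster, 5)                # the loop notices the gap and fills it
print(cluster.running)
```

Nobody tells the loop "start one more instance". You only state that 5 should be running, and the loop works out the difference every time it runs.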

Let’s get a bit more technical about Kubernetes

How Kubernetes Works

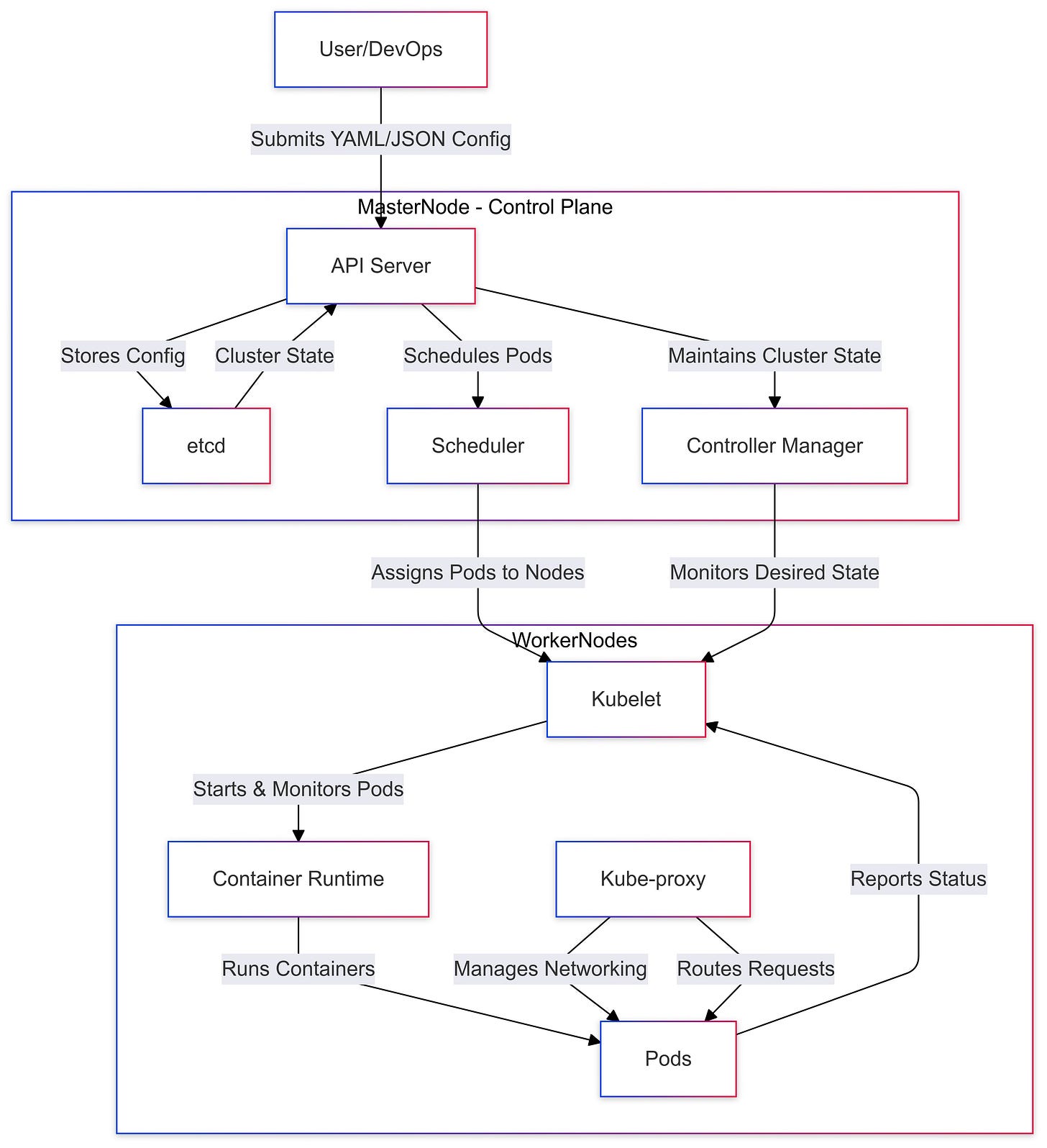

At its core, Kubernetes uses a cluster-based architecture. A cluster consists of a control plane (historically called the master node) and worker nodes, each with its own set of responsibilities:

Master Node (Control Plane):

Responsible for managing the cluster, scheduling workloads, and maintaining the desired state.

Key components:

API Server: Central communication point between users and the cluster.

Scheduler: Assigns workloads to worker nodes.

Controller Manager: Ensures the cluster matches the desired state.

etcd: Stores all cluster data persistently.

Worker Nodes:

Run the actual containerized applications.

Key components:

Kubelet: Ensures containers are running as instructed by the control plane.

Kube-proxy: Manages networking and forwarding of requests to the correct containers.

Container Runtime: Runs containers (e.g., containerd or CRI-O; since Kubernetes 1.24, Docker Engine needs the cri-dockerd adapter to be used as a runtime).
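To make the scheduler's job a bit more concrete, here is a rough Python sketch of a filter-then-score placement. It assumes CPU is the only resource and that the node with the most free CPU is preferred; the real scheduler weighs many more factors. The node names, pod names, and numbers are invented for the example.

```python
def schedule(pods, nodes):
    """Assign each pod to the node with the most free CPU that can still fit it.

    pods:  dict of pod name -> CPU request (millicores)
    nodes: dict of node name -> free CPU (millicores)
    """
    free = dict(nodes)  # work on a copy so the caller's dict is untouched
    placement = {}
    for pod, cpu_request in pods.items():
        # Filter: keep only nodes with enough free CPU for this pod
        candidates = [name for name, cpu in free.items() if cpu >= cpu_request]
        if not candidates:
            placement[pod] = None  # no node fits, so the pod stays pending
            continue
        # Score: prefer the node with the most free CPU
        best = max(candidates, key=lambda name: free[name])
        free[best] -= cpu_request
        placement[pod] = best
    return placement


nodes = {"node-a": 1000, "node-b": 500}
pods = {"web-1": 400, "web-2": 400, "web-3": 400, "web-4": 400}
print(schedule(pods, nodes))
```

Here web-1 and web-2 land on node-a, web-3 on node-b, and web-4 has nowhere to go until capacity frees up or a node is added.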

Key Kubernetes Abstractions

Pods: The smallest deployable unit in Kubernetes, typically running one or more tightly coupled containers.

Services: Abstract access to a group of pods, providing load balancing and stable networking.

Deployments: Manage the deployment and scaling of pods, ensuring the desired number of replicas is running.

Namespaces: Logical partitions within a cluster to separate resources and workloads.

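ConfigMaps and Secrets: Hold application configuration and sensitive data, respectively, separately from the container image. Note that Secrets are only base64-encoded by default, so encryption at rest and access controls need to be configured to keep them truly secure.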
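To see how a Deployment and its pods fit together, here is a small Python sketch that builds a Deployment manifest as a dictionary and prints it as JSON, which Kubernetes accepts alongside YAML. The helper name deployment_manifest is made up, and the image name my-python-app is reused from the Docker example on the assumption that the image has been pushed somewhere the cluster can pull it from.

```python
import json


def deployment_manifest(name, image, replicas, port):
    """Build a Deployment manifest as a plain dict (same shape as the YAML form)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector tells the Deployment which pods belong to it;
            # it has to match the labels on the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


manifest = deployment_manifest("my-python-app", "my-python-app", replicas=3, port=5000)
print(json.dumps(manifest, indent=2))
```

Saved to a file, output like this could be handed to the cluster with kubectl apply -f. The template section is the pod definition, and replicas says how many copies of that pod should exist.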

Workflow

Declarative Configuration: You define the desired state of your application (e.g., run 3 replicas of a web server) in YAML or JSON.

Control Plane Actions:

The API server processes the configuration and stores it in etcd.

The scheduler assigns pods to suitable worker nodes.

The kubelet on each worker node ensures containers are running.

Dynamic Adjustments:

Kubernetes monitors the system and adjusts the cluster automatically, such as restarting failed containers or, when an autoscaler is configured, scaling pods up or down based on CPU usage.
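For the CPU-based scaling mentioned above, the Kubernetes documentation describes the Horizontal Pod Autoscaler's core calculation as desired replicas = ceil(current replicas × current metric / target metric). Here is that formula as a small Python sketch. It leaves out the tolerance band, min/max replica limits, and stabilization windows that the real autoscaler applies, and the function and parameter names are my own.

```python
import math


def desired_replicas(current_replicas, current_cpu_percent, target_cpu_percent):
    """Core autoscaling ratio: scale the replica count by how far CPU is from target."""
    return math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)


# 5 replicas averaging 90% CPU against a 70% target: scale up
print(desired_replicas(5, 90, 70))  # 7

# 5 replicas averaging 35% CPU against a 70% target: scale down
print(desired_replicas(5, 35, 70))  # 3
```

Rounding up means the autoscaler errs on the side of having slightly more capacity than the ratio strictly calls for.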

Here’s a diagram to understand this process easily

Managed Kubernetes

Managed Kubernetes services provided by major cloud providers, such as Azure, AWS, and Google Cloud, offer fully managed, scalable, and secure Kubernetes clusters to simplify container orchestration.

Azure Kubernetes Service (AKS): Azure's managed Kubernetes service provides a simple, fast, and secure way to deploy and manage Kubernetes clusters. It handles tasks such as automated patching, scaling, and high availability, while allowing users to focus on application deployment and management. AKS integrates with other Azure services, offering built-in monitoring, logging, and identity management through Microsoft Entra ID (formerly Azure Active Directory).

Amazon Elastic Kubernetes Service (EKS): AWS’s EKS is a highly available and scalable service that takes care of cluster management, including provisioning, patching, and scaling. EKS supports a wide range of integrations with AWS services such as IAM, CloudWatch, and Route 53, offering enhanced security and observability. It also ensures the control plane is automatically managed and fully resilient.

Google Kubernetes Engine (GKE): GKE is known for its tight integration with Google Cloud services and benefits from Google’s deep Kubernetes expertise, since Kubernetes originated at Google. GKE automates key aspects such as updates, scaling, and monitoring. It also integrates with Google’s AI/ML tools, making it a strong choice for developers building modern, cloud-native applications.

All these services reduce the operational overhead of Kubernetes management, allowing developers to focus more on application development rather than cluster administration. They provide features like automatic scaling, high availability, and integration with the cloud provider's ecosystem, helping businesses achieve faster deployment and improved efficiency.

Now you know quite a bit about containers and their orchestration. In an upcoming article, I’ll be sharing a playground for you to experiment with these and gain hands-on experience without setting anything up on your computer. Till then, I hope you enjoyed reading this one. Have a wonderful day!
